Trust in leaders is central to citizen compliance with public policies. One potential determinant of trust is how leaders resolve conflicts between utilitarian and non-utilitarian ethical principles in moral dilemmas. Past research suggests that utilitarian responses to dilemmas can both erode and enhance trust in leaders: sacrificing some people to save many others (‘instrumental harm’) reduces trust, while maximizing the welfare of everyone equally (‘impartial beneficence’) may increase trust. In a multi-site experiment spanning 22 countries on six continents, participants (N = 23,929) completed self-report (N = 17,591) and behavioural (N = 12,638) measures of trust in leaders who endorsed utilitarian or non-utilitarian principles in dilemmas concerning the COVID-19 pandemic. Across both the self-report and behavioural measures, endorsement of instrumental harm decreased trust, while endorsement of impartial beneficence increased trust. These results show how support for different ethical principles can impact trust in leaders, and inform effective public communication during times of global crisis.

The Stage 1 protocol for this Registered Report was accepted in principle on 13 November 2020. The protocol, as accepted by the journal, can be found at https://doi.org/10.6084/m9.figshare.13247315.v1.

During times of crisis, such as wars, natural disasters or pandemics, citizens look to leaders for guidance. Successful crisis management often depends on mobilizing individual citizens to change their behaviours and make personal sacrifices for the public good 1 . Crucial to this endeavour is trust: citizens are more likely to follow official guidance when they trust their leaders 2 . Here, we investigate public trust in leaders in the context of the COVID-19 pandemic, which continues to threaten millions of lives around the globe at the time of writing 3,4 .

Because the novel coronavirus is highly transmissible, a critical factor in limiting pandemic spread is compliance with public health recommendations such as social distancing, physical hygiene and mask wearing 5,6 . Trust in leaders is a strong predictor of citizen compliance with a variety of public health policies 7,8,9,10,11,12 . During pandemics, trust in experts issuing public health guidelines is a key predictor of compliance with those guidelines. For example, during the H1N1 influenza pandemic of 2009, self-reported trust in medical organizations predicted self-reported compliance with protective health measures and vaccination rates 13,14 . During the COVID-19 pandemic, data from several countries show that public trust in scientists, doctors and the government is positively associated with self-reported compliance with public health recommendations 15,16,17,18 . These data suggest that trust in leaders is likely to be a key predictor of long-term success in containing the COVID-19 pandemic around the globe. However, the factors that determine trust in leaders during global crises remain understudied.

One possible determinant of trust in leaders during a crisis is how they resolve moral dilemmas that pit distinct ethical principles against one another. The COVID-19 pandemic has raised particularly stark dilemmas of this kind, for instance whether to prioritize young and otherwise healthy people over older people and those with chronic illnesses when allocating scarce medical treatments 19,20 . This dilemma and similar others highlight a tension between two major approaches to ethics. Consequentialist theories – of which utilitarianism is the most well-known exemplar 21 – posit that only consequences should matter when making moral decisions. Because younger, healthier people are more likely to recover and have longer lives ahead of them, utilitarians would argue that they should be prioritized for care because this is likely to produce the best overall consequences 22,23,24 . In contrast, non-utilitarian theories of morality, such as deontological theories 25,26,27,28,29 , argue that morality should consider more than just consequences, including rights, duties and obligations (see Supplementary Note 1 for further details). Non-utilitarians, on deontological grounds, could argue that everyone who is eligible (for example, by being a citizen and/or contributing through taxes or private health insurance) has an equal right to receive medical care, and therefore it is wrong to prioritize some over others 30 . While it is unlikely that ordinary citizens explicitly think about moral issues in terms of specific ethical theories 21,31 , past work shows that these philosophical concepts explain substantial variance in the moral judgements of ordinary citizens 32,33 , including those in the context of the COVID-19 pandemic 34 .

There is robust evidence that people who endorse utilitarian principles in sacrificial dilemmas – deeming it morally acceptable to sacrifice some lives to save many others – are seen as less moral and trustworthy, chosen less frequently as social partners and trusted less in economic exchanges than people who take a non-utilitarian position and reject sacrificing some to save many 35,36,37,38,39,40 . This suggests that leaders who take a utilitarian approach to COVID-19 dilemmas will be trusted less than leaders who take a non-utilitarian approach. Anecdotally, some recent case studies of public communications are consistent with this hypothesis. In the United States, for example, public discussions around whether to reopen schools and the economy versus remain in lockdown highlighted tensions between utilitarian approaches and other ethical principles, with some leaders stressing an imperative to remain in lockdown to prevent deaths from COVID-19 (consistent with deontological principles) but others arguing that lockdown also has costs and these need to be weighed against the costs of pandemic-related deaths (consistent with utilitarian principles; Supplementary Note 2). Those who appealed to utilitarian arguments – such as President Donald Trump, who argued “we cannot let the cure be worse than the problem itself” 41 and Texas Lieutenant Governor Dan Patrick, who suggested that older Americans might be “willing to take a chance” on their survival for the sake of their grandchildren’s economic prospects 42 – were met with widespread public outrage 43 . Likewise, when leaders in Italy suggested prioritizing young and healthy COVID-19 patients over older patients when ventilators became scarce, they were intensely criticized by the public 44 . Mandatory contact tracing policies, which have been proposed on utilitarian grounds, have also faced strong public criticisms about infringement of individual rights to privacy 45,46,47 .

While past research and recent case studies suggest that utilitarian approaches to pandemic dilemmas are likely to erode trust in leaders, other evidence suggests this conclusion may be premature. First, some work shows that utilitarians are perceived as more competent than non-utilitarians 38 , and to the extent that trust in leaders is related to perceptions of their competence 2 , it is possible that utilitarian approaches to pandemic dilemmas will increase rather than decrease trust in leaders. Second, utilitarianism has at least two distinct dimensions: it permits harming innocent individuals to maximize aggregate utility (‘instrumental harm’), and it treats the interests of all individuals as equally important (‘impartial beneficence’) 21,33 . Indeed, preliminary evidence suggests these two dimensions characterize the way ordinary people think about moral dilemmas in the context of the COVID-19 pandemic 34 . These two dimensions of utilitarianism not only are psychologically distinct in the general public 33 but also have distinct impacts on perception of leaders. Specifically, when people endorse (versus reject) utilitarian principles in the domain of instrumental harm they are seen as worse political leaders, but in some cases are seen as better political leaders when they endorse utilitarian principles in the domain of impartial beneficence 37 .

Another dilemma that pits utilitarian principles against other non-utilitarian principles – this time in the domain of impartial beneficence – is whether leaders should prioritize their own citizens over people in other countries when allocating scarce resources. The utilitarian sole focus on consequences mandates a strict form of impartiality: the mere fact that someone is one’s friend (or their mother or fellow citizen) does not imply that they have any obligations to such a person that they do not have to any and all persons 48 . Faced with a decision about whether to help a friend (or family member or fellow citizen) or instead provide an equal or slightly larger benefit to a stranger, this strict utilitarian impartiality means that one cannot morally justify favouring the person closer to them. In contrast, many non-utilitarian approaches explicitly incorporate these notions of special obligations, recognizing the relationships between people as morally significant. Here, President Trump went against utilitarian principles when he ordered a major company developing personal protective equipment (PPE) to stop distributing it to other countries who needed it 49 , or when he ordered the US government to buy up all the global stocks of the COVID-19 treatment remdesivir 50 . His actions generated outrage across the world and stood in contrast to statements from many other Western leaders at the time. The Prime Minister of the UK, Boris Johnson, for example, endorsed impartial beneficence when he argued for the imperative to “ensure that the world’s poorest countries have the support they need to slow the spread of the virus” (3 June 2020) 51 . In a similar vein, the Dutch government donated 50 million euros to the Coalition for Epidemic Preparedness Innovations, an organization that aims to distribute vaccines equally across the world 52 .

In sum, public trust in leaders is likely to be a crucial determinant of successful pandemic response and may depend in part on how leaders approach the many moral dilemmas that arise during a pandemic. Utilitarian responses to such dilemmas may erode or enhance trust relative to non-utilitarian approaches, depending on whether they concern instrumental harm or impartial beneficence. Past research on trust and utilitarianism is insufficient to understand how utilitarian resolutions to moral dilemmas influence trust during the COVID-19 pandemic – and future crises – for several reasons. First, it has relied on highly artificial moral dilemmas, such as the ‘trolley problem’ 53,54 , that most people have not encountered in their daily lives. Thus, the findings of past studies may not generalize to the context of a global health crisis, where everyone around the world is directly impacted by the moral dilemmas that arise during a pandemic. Second, because the vast majority of previous work on trust in utilitarians has focused on instrumental harm, we know little about how impartial beneficence impacts trust. Third, most previous work on this topic has focused on trust in ordinary people. However, there is evidence that utilitarianism differentially impacts perceptions of ordinary people and leaders 37,38,40 , which means we cannot generalize from past research on trust in utilitarians to a leadership context. Because leaders have power to resolve moral dilemmas through policymaking, and therefore can have far more impact on the outcomes of public health crises than ordinary people can, it is especially important to understand how leaders’ approaches to moral dilemmas impact trust. Finally, past work on inferring trust from moral decisions has been conducted in just a handful of Western populations – in the United States, Belgium, and Germany – and so may not generalize to other countries that are also affected by the COVID-19 pandemic. 
We need, therefore, to assess cross-cultural stability by testing this hypothesis in different countries around the world. Indeed, given observations of cultural variation in the willingness to endorse sacrificial harm 32 , it is not a foregone conclusion that utilitarian decisions will impact trust in leaders universally. For further details of how the present work advances our understanding of moral dilemmas and trust in leaders, see Supplementary Notes 3–5.

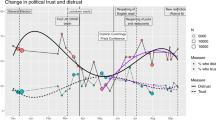

The goal of the current research is to test the hypothesis that endorsement of instrumental harm would decrease trust in leaders while endorsement of impartial beneficence would increase trust in leaders, in the context of the COVID-19 pandemic. Testing this hypothesis across a diverse set of 22 countries spanning six continents (Fig. 1a and Supplementary Fig. 1) in November–December 2020, we aim to inform how leaders around the globe can communicate with their constituencies in ways that will preserve trust during global crises. Given the public health consequences of mistrust in leaders 7,8,9 , if our hypothesis is confirmed, leaders may wish to carefully consider weighing in publicly on moral dilemmas that are unresolvable with policy, because their opinions might erode citizens’ trust in other pronouncements that may be more pressing, such as advice to comply with public health guidelines.

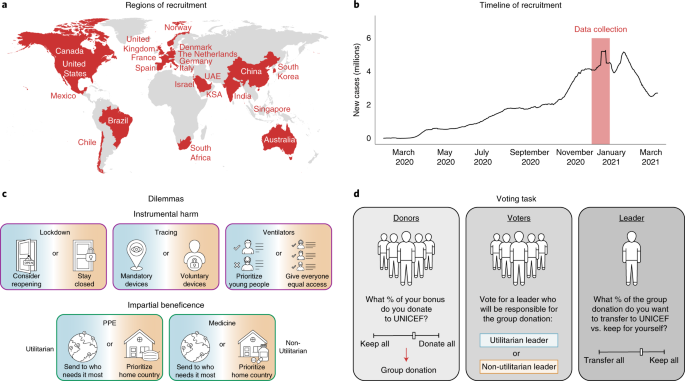

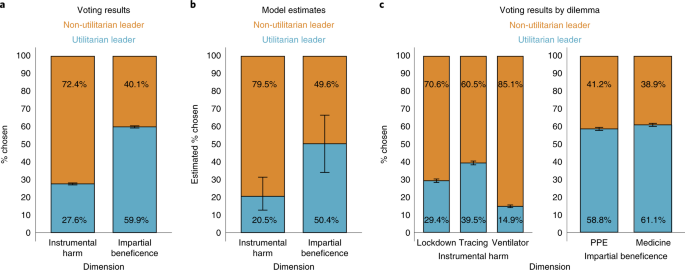

To test our hypothesis empirically, we drew on case studies of public communications to identify five moral dilemmas that have been actively debated during the COVID-19 pandemic (Fig. 1c). Three of these dilemmas involved instrumental harm: the Ventilators dilemma concerned whether younger individuals should be prioritized to receive intensive medical care over older individuals when medical resources such as ventilators are scarce 23,44 , the Lockdown dilemma concerned whether to consider reopening schools and the economy or remain in lockdown 23,55 and the Tracing dilemma concerned whether it should be mandatory for residents to carry devices that continuously trace the wearer’s movements, allowing the government to immediately identify people who have potentially been exposed to the coronavirus 45,46,47 . The other two dilemmas involved impartial beneficence: the PPE dilemma concerned whether PPE manufactured within a particular country should be reserved for that country’s citizens under conditions of scarcity, or sent where it is most needed 23,56,57,58 , and the Medicine dilemma concerned whether a novel COVID-19 treatment developed within a particular country should be delivered with priority to that country’s citizens, or shared impartially around the world 56,59,60 . Participants in our studies read about leaders who endorsed either utilitarian or non-utilitarian solutions to the dilemmas (Table 1) and subsequently completed behavioural and self-report measures of trust in the respective leaders (Extended Data Fig. 1). For example, some read about a leader who endorsed prioritizing younger over older people for scarce ventilators and were then asked how much they trusted that leader. While there are many similar dilemmas potentially relevant to the COVID-19 crisis, we chose to focus on the five described above because they (1) have been publicly debated at the time of writing, and (2) apply to all countries in our planned sample.
For further details of why we chose these specific dilemmas and how they can test our theoretical predictions, see Supplementary Notes 2 and 6–9.

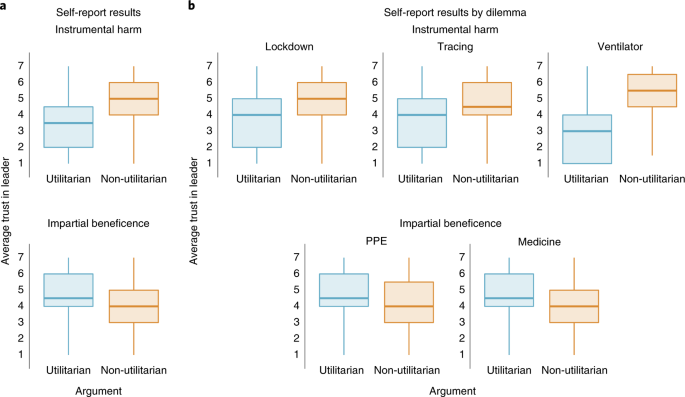

To examine participants’ self-reported trust in the leaders, we fitted a linear mixed-effects model of the effect of argument type (utilitarian versus non-utilitarian), dimension type (instrumental harm versus impartial beneficence) and their interaction on the composite score of trust, adding demographic variables (gender, age, education, subjective socio-economic status (SES), political ideology and religiosity) and policy support as fixed effects and dilemmas and countries as random intercepts, with participants nested within countries (for details, see Analysis plan for hypothesis testing). As specified in Analysis plan, we also ran a model that included countries as random slopes of the two main effects and the interactive effect; the results were consistent with the simpler model, but due to convergence issues with the more complex model, we report the simpler model.
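As a reading aid, the simpler self-report model described above can be written out as a single equation. The notation is ours and is an assumption rather than the authors' own: p indexes participants, d dilemmas and c countries; A is argument type, D is dimension type, and x collects the demographic covariates and policy support.

```latex
\mathrm{Trust}_{pdc} = \beta_0 + \beta_1 A_{pdc} + \beta_2 D_{d}
  + \beta_3 \,(A_{pdc} \times D_{d})
  + \boldsymbol{\gamma}^{\top}\mathbf{x}_{pdc}
  + u_{c} + u_{p(c)} + u_{d} + \varepsilon_{pdc},
\qquad
u_{c} \sim \mathcal{N}(0,\sigma^2_{\mathrm{country}}),\;
u_{p(c)} \sim \mathcal{N}(0,\sigma^2_{\mathrm{participant}}),\;
u_{d} \sim \mathcal{N}(0,\sigma^2_{\mathrm{dilemma}}),\;
\varepsilon_{pdc} \sim \mathcal{N}(0,\sigma^2)
```

Under this sketch, the hypothesis concerns the interaction coefficient β₃; the random-slopes variant mentioned in the text would additionally let β₁, β₂ and β₃ vary by country.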

To examine participants’ trust in the leaders as demonstrated by their voting behaviour, we fitted a generalized linear mixed-effects model with the logit link of the effect of dimension type (instrumental harm versus impartial beneficence) on leader choice in the voting task (utilitarian versus non-utilitarian), adding demographic variables (gender, age, education, subjective SES, political ideology and religiosity) and policy support as fixed effects and dilemmas and countries as random intercepts, with participants nested within countries (for details, see Analysis plan for hypothesis testing). This yielded a singular fit, so following our analysis plan, we reduced the complexity of the random-effects structure by only including dilemmas and countries as random intercepts. As specified in Analysis plan, we also ran a model that included countries as random slopes of the effect of dimension type; the results were consistent with the simpler model, but due to singularity issues (both with and without participants nested within countries), we report the simpler model.

Based on suggestions that logit and linear models should converge and that linear models can in some cases be preferable 63,64 , we had also pre-registered the same analysis using a linear model (instead of a model with the logit link) with the identical fixed- and random-effects structures. However, the linear model yielded non-significant results for the main effect of dimension type with our Bonferroni-corrected alpha (B = 0.18, s.e. 0.05, t(3) = 3.73, P = 0.034, CI [0.07, 0.30]; probability of choosing utilitarian leader in instrumental harm dilemmas 0.30, s.e. 0.03, CI [0.16, 0.45], in impartial beneficence dilemmas 0.49, s.e. 0.04, CI [0.31, 0.67]). This discrepancy was unusual, since binomial and linear approaches most often give converging results 65,66 . Following our pre-registered analysis plan, we followed up on this non-significant result using the two one-sided tests (TOST) procedure to differentiate between insensitive versus null results. Given the equivalence bounds set by our smallest effect size of interest (SESOI) (ΔL = −0.15 and ΔU = 0.15; Power analysis), the effect of dimension on leader choice (a 32% difference) was statistically not equivalent to zero (z = 20.77, P = 1.000 for the test with ΔU). This analysis, however, does not take into account the covariates specified in the models.
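The logic of the TOST follow-up can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' code: the equivalence bounds (−0.15, 0.15) come from the text, the estimate is assumed to be the 32% difference expressed as 0.32 on the probability scale, and the standard error of 0.01 is a placeholder chosen only for illustration, not a reported value.

```python
from statistics import NormalDist


def tost_z(estimate, se, lower, upper, alpha=0.05):
    """Two one-sided z-tests for equivalence within [lower, upper].

    Equivalence is declared only if BOTH one-sided tests reject,
    i.e. the larger of the two p-values is below alpha.
    """
    std_normal = NormalDist()
    z_lower = (estimate - lower) / se  # tests H0: effect <= lower bound
    z_upper = (estimate - upper) / se  # tests H0: effect >= upper bound
    p_lower = 1.0 - std_normal.cdf(z_lower)
    p_upper = std_normal.cdf(z_upper)
    return {
        "z_lower": z_lower,
        "z_upper": z_upper,
        "p_lower": p_lower,
        "p_upper": p_upper,
        "equivalent": max(p_lower, p_upper) < alpha,
    }


# Bounds from the text; se=0.01 is a hypothetical placeholder.
result = tost_z(estimate=0.32, se=0.01, lower=-0.15, upper=0.15)
print(result)
```

Because the assumed estimate lies well above the upper bound, the test against ΔU yields a large positive z and a p-value near 1, so equivalence to zero is not supported. This matches the direction of the reported result, though the exact z depends on the true standard error.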

To resolve the discrepancy between our pre-registered binomial and linear models, we ran a number of additional exploratory models. These are described in the Exploratory analyses section and summarized in Table 3.

Table 3 Results for voting task models

Following our analysis plan, we verified the robustness of our findings in several ways. First, due to the changes in country-specific lockdown policies that were implemented between pre-registration and data collection, we ran a variation of our models that omitted the Lockdown dilemma. The results were substantially unchanged, both for the self-report task (interaction between argument and dimension type: B = 2.26, s.e. 0.05, t(17,640) = 48.56, P < 0.001, CI [2.16, 2.37]) and for the voting task (main effect for dimension type in binomial model: B = 1.29, s.e. 0.39, z = 3.33, P < 0.001, CI [0.06, 2.52], OR 3.63).

As noted above, our main pre-registered analysis for the voting task was a generalized linear mixed-effects model with the logit link of the effect of dimension type (instrumental harm versus impartial beneficence) on the leader choice (utilitarian versus non-utilitarian), with demographics and participants’ own policy preferences as fixed effects and dilemmas and countries as random intercepts (Table 2). This analysis confirmed our predictions, but we had also pre-registered the same analysis using a linear model (instead of logit link) with the identical fixed- and random-effects structure. As described above, the results from this model did not pass our pre-registered Bonferroni-corrected significance threshold. This discrepancy was unusual, given prior reports that linear and binomial models yield identical results in the vast majority of cases 63,66 . As a first check on this discrepancy, we assessed the fits of the binomial and linear models by fitting each with half the data, and predicting the leader choices in the remaining half. The mean difference between the predicted and observed values was lower in the binomial model (mean error 0.25) compared with the linear model (mean error 0.27; t(6,318) = −32.53, P < 0.001), suggesting that the binomial model is a better fit to our data.
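The reported degrees of freedom, t(6,318), are consistent with a comparison over one held-out half of the 12,638 voting-task participants (12,638 / 2 − 1 = 6,318). The text does not name the test, so the sketch below assumes a paired t-test on per-observation prediction errors from the two models. The error values are made-up toy numbers, used only to show the computation.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(errors_a, errors_b):
    """Paired t statistic and degrees of freedom for two error vectors."""
    if len(errors_a) != len(errors_b):
        raise ValueError("error vectors must have the same length")
    diffs = [a - b for a, b in zip(errors_a, errors_b)]
    n = len(diffs)
    t_stat = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t_stat, n - 1


# Hypothetical absolute prediction errors on four held-out observations.
binomial_errors = [0.20, 0.30, 0.25, 0.10]
linear_errors = [0.30, 0.35, 0.30, 0.20]

t_stat, df = paired_t(binomial_errors, linear_errors)

# Degrees of freedom implied by holding out half of the voting sample.
heldout_df = 12638 // 2 - 1

print(round(t_stat, 3), df, heldout_df)
```

A negative t statistic here means the first model (binomial) has the smaller errors, which is the direction reported in the text.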

Next, we ran a series of follow-up analyses to supplement our pre-registered, theoretically informed models. There are a variety of opinions for how to best model complex nested binary data like ours. For example, while random effects aid generalizability 67 , some advocate for modelling country variables as fixed rather than random effects to prevent increases in model bias 68,69 or overly complex random-effects structures 70 . Moreover, while controlling for demographic variables is important for generalizability of our findings, some advocate for minimal use of covariates to prevent type 1 error inflation 71 . Due to the discrepancy in the theoretically justified models that we had pre-registered and ongoing debates over the specifications of modelling such complex data, we ran a variety of models (described in detail in Supplementary Results and summarized in Table 3) with different link functions and different specifications of fixed and random effects, as well as robust random effects and randomization inference. Overall, all models led to the same conclusion: participants voted for the non-utilitarian leader more than the utilitarian leader in dilemmas about instrumental harm, but the reverse in impartial beneficence dilemmas, with the utilitarian leader trusted more than the non-utilitarian leader – suggesting that the discrepancy between our pre-registered binomial and linear models was due to an overly complex random-effects structure.

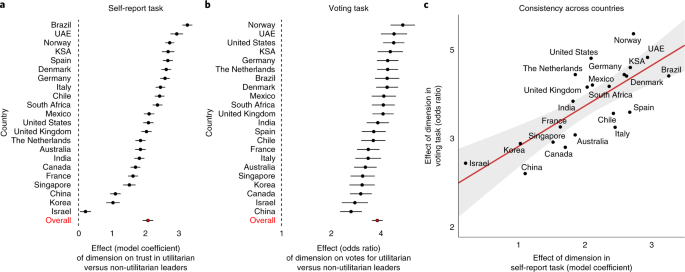

To explore cross-cultural variation in trust in utilitarian versus non-utilitarian leaders, we ran additional models with country as a random slope and extracted the coefficients of interest (Fig. 4a,b). For the self-report task, we conducted a linear mixed-effects model of the effect of argument type (utilitarian versus non-utilitarian), dimension type (instrumental harm versus impartial beneficence) and their interaction on the composite score of trust, adding demographic variables (gender, age, education, subjective SES, political ideology and religiosity) and policy support as fixed effects and countries as a random slope of the interactive effect of argument and dimension. First, we confirmed that there was a significant interaction between argument and dimension type (B = 2.08, s.e. 0.16, t(21) = 13.08, P < 0.001, CI [1.71, 2.45]), consistent with our pre-registered model. Next, we extracted the interaction coefficients for each country, as well as the standard errors of the coefficients, with the estimates plotted in Fig. 4a. While there were some variations in the effect sizes, the results were remarkably consistent across countries. The predicted pattern of results was observed in all 22 countries, with Israel, South Korea and China showing the smallest effects and Brazil, the UAE and Norway showing the largest effects.

For the voting task, we conducted a generalized linear mixed-effects model with the logit link of the effect of dimension type (instrumental harm versus impartial beneficence) on leader choice (utilitarian versus non-utilitarian), adding demographic variables (gender, age, education, subjective SES, political ideology and religiosity) and policy support as fixed effects and countries as a random slope of dimension. First, we confirmed there was a significant main effect for dimension type (B = 1.34, s.e. 0.07, z = 17.88, P < 0.001, CI [1.16, 1.51], OR 3.81), as in our pre-registered model. Next, we extracted the coefficients for each country, as well as the standard errors of the coefficients, and exponentiated them to get the odds ratios, with the resulting estimates plotted in Fig. 4b. Again, the results were remarkably consistent, with the predicted pattern of results seen across all 22 countries, and with China, Israel and Canada showing the smallest effects and Norway, the UAE and the United States showing the largest effects.
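The exponentiation step is simple enough to check by hand. The sketch below converts the log-odds coefficients quoted in the text into odds ratios; the function names are ours. Because the inputs are the rounded values as printed, the outputs can differ from the reported odds ratios in the second decimal place.

```python
from math import exp


def odds_ratio(log_odds):
    """Convert a logit-scale coefficient to an odds ratio."""
    return exp(log_odds)


def odds_ratio_ci(lower, upper):
    """Convert a logit-scale confidence interval to the odds-ratio scale."""
    return exp(lower), exp(upper)


# Coefficients as printed in the text (rounded to two decimals).
or_country_model = odds_ratio(1.34)      # reported OR 3.81
or_without_lockdown = odds_ratio(1.29)   # reported OR 3.63
ci_country_model = odds_ratio_ci(1.16, 1.51)

print(round(or_country_model, 2), round(or_without_lockdown, 2))
print(tuple(round(bound, 2) for bound in ci_country_model))
```

An odds ratio near 3.8 means the odds of choosing the utilitarian leader were almost four times higher in impartial beneficence dilemmas than in instrumental harm dilemmas.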

The self-report and behavioural tasks employed in the current study are highly complementary in several ways: for example, the former is more generalizable across different situations, while the latter is incentivized and more concrete (see Supplementary Note 10 for further details). To ensure that despite their superficial differences the tasks targeted the same construct, that is, trust in leaders, and measured robust preferences across countries, we checked that the effects of moral arguments and utilitarian dimensions on these measures were correlated across countries. Indeed, we found that the coefficients of the interaction between moral argument and moral dimension on trust in the self-report task were significantly correlated with the effect of moral dimension on leader choice in the voting task (r = 0.76, P < 0.001; Fig. 4c).
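To see why r = 0.76 is significant with so few data points, the correlation can be converted into a t statistic. The sketch below assumes each of the 22 countries contributes one pair of coefficients (so n = 22), which the text implies but does not state outright. The `pearson_r` helper is included only to show how the correlation itself would be computed from two coefficient lists.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def r_to_t(r, n):
    """t statistic and degrees of freedom for testing r against zero."""
    return r * sqrt(n - 2) / sqrt(1 - r * r), n - 2


# Reported correlation; n = 22 is our assumption (one point per country).
t_stat, df = r_to_t(0.76, 22)
print(round(t_stat, 2), df)
```

With 20 degrees of freedom, a t statistic above 5 comfortably exceeds the two-sided critical value for P = 0.001 (about 3.85), which agrees with the reported P < 0.001.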

The main analyses reported above were performed on a subset of participants who passed the comprehension checks, as per our pre-registered sampling plan (criterion 5; see Sampling plan). For the voting task, the observed pass rate (53.26%) was lower than the pre-registered expected pass rate (60%), suggesting that the comprehension check may have been overly stringent. Therefore, we conducted additional analyses to explore whether this pre-registered exclusion criterion might have affected the generalizability of our results across the study population in terms of education level.

The COVID-19 pandemic has raised a number of moral dilemmas that engender conflicts between utilitarian and non-utilitarian ethical principles. Building on past work on utilitarianism and trust, we tested the hypothesis that endorsement of utilitarian solutions to pandemic dilemmas would impact trust in leaders. Specifically, in line with suggestions from previous work and case studies of public communications during the early stages of the pandemic, we predicted that endorsing instrumental harm would decrease trust in leaders, while endorsing impartial beneficence would increase trust. Experiments conducted during November–December 2020 in 22 countries across six continents (total N = 23,929; valid sample for self-report task 17,591; valid sample for behavioural task 12,638) provided robust support for our hypothesis. In the context of five realistic pandemic dilemmas, participants reported lower trust in leaders who endorsed instrumental sacrifices for the greater good and higher trust in leaders who advocated for impartially maximizing the welfare of everyone equally. In a behavioural measure of trust, only 28% of participants preferred to vote for a utilitarian leader who endorsed instrumental harm, while 60% voted for an impartially beneficent utilitarian leader. These findings were robust to controlling for a variety of demographic characteristics as well as participants’ own policy preferences regarding the dilemmas. Although we observed some variation in effect sizes across the countries we sampled, the overall pattern of results was highly robust across countries. Our results suggest that endorsing utilitarian approaches to moral dilemmas can both erode and enhance trust in leaders across the globe, depending on the type of utilitarian morality.

We designed our set of dilemmas to rule out several alternative explanations for our findings, such as a general preference for less restrictive leaders (Supplementary Note 7), leaders who treat everyone equally (Supplementary Note 8) and leaders who seek to minimize COVID-19-related deaths (Supplementary Note 9). In addition, all of our results survived planned robustness checks to account for the possibility that local policies related to lockdowns or contact tracing could bias participants’ responses. Post hoc analyses demonstrated that our findings were highly consistent across the different dilemmas for instrumental harm (Lockdown, Tracing and Ventilators) and impartial beneficence (Medicine and PPE).

While the robustness of our findings across countries speaks to their broad cultural generalizability, further work is needed to understand the observed variations in effect sizes across countries. It seems plausible that both economic (for example, gross domestic product or socio-economic inequality) and cultural (for example, social network structure) differences across countries could explain some of the observed variations. One possibility, for example, is that country-level variations in tightness–looseness 72 , which have been associated with countries’ success in limiting cases in the COVID-19 pandemic 73 , might moderate the effects of moral arguments on trust in leaders. Another direction for future research could be to explore how country-level social network structure might influence our results. Individuals in countries with a higher kinship index 74 and a more family-oriented social network structure, for example, might be less likely to trust utilitarian leaders, especially when the utilitarian solution conflicts with more local moral obligations.

There are several important limitations to the generalizability of our findings. First, although our samples were broadly nationally representative for age and gender (with some exceptions; see Results), we did not assess representativeness of our samples on a number of other factors including education, income and geographic location. Second, while our results do concord with the limited existing research examining the effects of endorsing instrumental harm and impartial beneficence on perceived suitability as a leader 37 , and held across different examples of our pandemic-specific dilemmas, it of course remains possible that different results would be seen when judging leaders’ responses in other types of crises (for example, violent conflicts, natural disasters or economic crises) or at different stages of a crisis (for example, at the beginning versus later stages). Third, the reported experiments tested how responses to moral dilemmas influenced trust in anonymous, hypothetical political leaders. In the real world, however, people form and update impressions of known leaders with a history of political opinions and behaviours, and it is plausible that inferences of trustworthiness depend not just on a leader’s recent decisions but also on their history of behaviour, just as classic work on impression formation shows that the same information can lead to different impressions depending on prior knowledge about the target person 75 . Furthermore, we did not specify the gender of the leaders in our experiments (except in the voting task for China and for the Hebrew and Arabic translations, where it is not possible to indicate ‘leader’ without including a gendered pronoun; here it was translated in the masculine form). 
Past work conducted in the United States suggests that participants may default to an assumption that the leader is a man 76 , but it will be important for future work to assess whether men and women leaders are judged differentially for their moral decisions. Because women are typically stereotyped as being warmer and more communal than men 77 , it is plausible that women leaders would face more backlash for making ‘cold’ utilitarian decisions, especially in the domain of instrumental harm. Fourth, because the current work focused on trust in political leaders, it remains unclear how utilitarianism would impact trust in people who occupy other social roles, such as medical workers or ordinary citizens. Fifth, and finally, it could be interesting to explore further the connection between impartial beneficence and intergroup psychology, especially with regard to teasing apart ‘impartiality’ and ‘beneficence’. For example, even holding beneficence constant, a leader who advocates for impartially sharing resources with a rival country may be perceived differently from one who impartially shares with an allied country (and, while speculative, this distinction might explain why Israel was an outlier in impartial beneficence, being a country in a region with ongoing local conflicts).

Our results have clear implications for how leaders’ responses to moral dilemmas can impact how they are trusted. In times of global crisis, such as the COVID-19 pandemic, leaders will necessarily face real, urgent and serious dilemmas. Faced with such dilemmas, decisions have to be made, and our findings suggest that how leaders make these judgements can have important consequences, not just for whether they are trusted on the issue in question but also more generally. Importantly, this will be the case even when the leader has little direct control over the resolution. While a national leader (for example, a president or prime minister) has the power and responsibility to resolve some moral dilemmas with policy decisions, not all political leaders (for example, as in our study, local mayors) have that power. A leader with little ability to directly impact the resolution of a moral dilemma might consider that voicing an opinion on that dilemma could reduce their credibility on other issues that they have more power to control.

To conclude, we investigated how trust in leaders is sensitive to how they resolve conflicts between utilitarian and non-utilitarian ethical principles in moral dilemmas during a global pandemic. Our results provide robust evidence that utilitarian responses to dilemmas can both erode and enhance trust in leaders: advocating for sacrificing some people to save many others (that is, instrumental harm) reduces trust, while arguing that we ought to impartially maximize the welfare of everyone equally (that is, impartial beneficence) increases trust. Our work advances understanding of trust in political leaders and shows that, across a variety of cultures, it depends not just on whether they make moral decisions but also which specific moral principles they endorse.

Our research complies with all relevant ethical regulations. The study was approved by the Yale Human Research Protection Program Institutional Review Board (protocol IDs 2000027892 and 2000022385), the Ben-Gurion University of the Negev Human Subjects Research Committee (request no. 20TrustCovR), the Centre for Experimental Social Sciences Ethics Committee (OE_0055) and the NHH Norwegian School of Economics Institutional Review Board (NHH-IRB 10/20). Informed consent was obtained from all participants.

An overview of the experiment is depicted in Extended Data Fig. 1. After selecting their language, providing their consent and passing two attention checks, participants were told that they would “read about three different debates that are happening right now around the world”, that they would be given “some of the justifications that politicians and experts are giving for different policies”, and that they would be “ask[ed] some questions about [their] opinions”. They then completed two tasks measuring their trust in leaders expressing either utilitarian or non-utilitarian opinions (one using a behavioural measure and one using self-report measures, presented in a randomized order); these tasks were followed by questions about their impressions about the ongoing pandemic crisis, as well as individual difference and demographic measures, as detailed below. Data collection was performed blind to the conditions of the participants.

Both behavioural and self-report measures of trust involved five debates on the current pandemic crisis, three of which involved instrumental harm (IH) and two impartial beneficence (IB) (summarized in Fig. 1c and Table 1; for full text, see Supplementary Methods). Each of these five dilemmas was based on real debates that have been occurring during the COVID-19 pandemic, and we developed the philosophical components of each argument in consultation with moral philosophers.

See Supplementary Notes 2 and 6–9 for further details of why we chose these specific dilemmas and how they can test our theoretical predictions.

Where the survey was administered in a non-English-speaking country, study materials were translated following a standard forward- and back-translation procedure 78 . First, for forward translation, a native speaker translated materials from English to the target language. Second, for back translation, a second native translator (who had not seen the original English materials) translated the materials back into English. Results were then compared, and if there were any substantial discrepancies, a second forward- and back-translation was conducted with translators working in tandem to resolve issues. Finally, the finished translated and back-translated materials were checked by researchers coordinating the experiment for that country.

Participants were randomly and blindly assigned to one of four conditions in the beginning of the experiment. These conditions corresponded to a 2 × 2 between-subjects design: 2 (moral dimension in the voting task: instrumental harm/impartial beneficence) × 2 (argument in the self-report task: utilitarian/non-utilitarian). In addition, we randomized the order of tasks (voting or self-report task first), the order of arguments in the voting task (utilitarian or non-utilitarian first), the order of dilemmas in the self-report task (Lockdown, Ventilators or Tracing first if instrumental harm, and PPE or Medicine first if impartial beneficence) and the dilemmas displayed (two in the self-report task and one in the voting task randomly chosen among Lockdown, Ventilators and Tracing if instrumental harm, and PPE and Medicine if impartial beneficence). This design allowed us to minimize demand characteristics with between-subjects manipulations of key experimental factors while at the same time maximizing efficiency of data collection.
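The randomization scheme described above can be sketched as follows. This is a minimal illustration in Python, not the survey software's actual logic: the function name `assign_participant`, the dictionary keys and the use of equal assignment probabilities are assumptions made for the example.

```python
import random

# Dilemma names as given in the text, grouped by moral dimension.
IH_DILEMMAS = ["Lockdown", "Ventilators", "Tracing"]  # instrumental harm
IB_DILEMMAS = ["PPE", "Medicine"]                     # impartial beneficence


def assign_participant(rng):
    """Draw one participant's condition and presentation orders (hypothetical helper)."""
    # 2 x 2 between-subjects factors (equal probabilities are an assumption).
    voting_dimension = rng.choice(["instrumental harm", "impartial beneficence"])
    self_report_argument = rng.choice(["utilitarian", "non-utilitarian"])

    if voting_dimension == "instrumental harm":
        voting_dilemma = rng.choice(IH_DILEMMAS)
        # Self-report task uses the other dimension: both IB dilemmas, random order.
        self_report_dilemmas = rng.sample(IB_DILEMMAS, 2)
    else:
        voting_dilemma = rng.choice(IB_DILEMMAS)
        # Two of the three IH dilemmas, in random order.
        self_report_dilemmas = rng.sample(IH_DILEMMAS, 2)

    return {
        "voting_dimension": voting_dimension,
        "self_report_argument": self_report_argument,
        "voting_dilemma": voting_dilemma,
        "self_report_dilemmas": self_report_dilemmas,
        # Order of the two tasks and of the two arguments in the voting task.
        "task_order": rng.sample(["voting", "self-report"], 2),
        "voting_argument_order": rng.sample(["utilitarian", "non-utilitarian"], 2),
    }


if __name__ == "__main__":
    print(assign_participant(random.Random(2020)))
```

Because the self-report dilemmas are always drawn from the dimension not used in the voting task, no participant can see the same dilemma in both tasks.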

We included two attention checks prior to the beginning of the experiment. Any participants who failed either of these were then screened out immediately. First, participants were told:

“In studies like ours, there are sometimes a few people who do not carefully read the questions they are asked and just ‘quickly click through the survey.’ These random answers are problematic because they compromise the results of the studies. It is very important that you pay attention and read each question. In order to show that you read our questions carefully (and regardless of your own opinion), please answer ‘TikTok’ in the question on the next page”

Then, on the next page, participants were given a decoy question: “When an important event is happening or is about to happen, many people try to get informed about the development of the situation. In such situations, where do you get your information from?”. Participants were asked to select among the following possible answers, displayed in a randomized order: TikTok, TV, Twitter, Radio, Reddit, Facebook, Youtube, Newspapers, Other. Participants who failed to follow our instructions and selected any answer other than the instructed one (“TikTok”) were then screened out of the survey. Second, participants were asked to read a short paragraph about the history and geography of roses. On the following page, they were asked to indicate which of six topics was not discussed in the paragraph. Participants who answered incorrectly were then screened out of the survey (with the exception of those who participated via Prolific, who were instead allowed to continue due to platform requirements).

Both the voting and self-report tasks began with an introduction to a specific dilemma. In the voting task, participants viewed a single dilemma, and in the self-report task, participants viewed two dilemmas in randomized order (see Extended Data Fig. 1 for details). No participant saw the same dilemma in both the voting and self-report tasks.

The dilemma introduction consisted of a short description of the dilemma (for example, in the PPE dilemma: “Imagine that […] there will soon be another global shortage of personal protective equipment [… and] political leaders are debating how personal protective equipment should be distributed around the globe.”), followed by a description of two potential policies (for example, in the PPE dilemma, US participants read: “[S]ome are arguing that PPE made in American factories should be sent wherever it can do the most good, even if that means sending it to other countries. Others are arguing that PPE made in American factories should be kept in the U.S., because the government should focus on protecting its own citizens.”).

After reading about the dilemma, participants were asked to provide their own opinion about the best course of action (“Which policy do you think should be adopted?”), answered on a 1–7 scale, with the endpoints (1 and 7) representing strong preferences for one of the policies (for example, in the PPE dilemma, they were labelled “Strongly support U.S.-made PPE being reserved for protecting American citizens” and “Strongly support U.S.-made PPE being given to whoever needs it most”, respectively), and the midpoint (4) representing indifference (“Indifferent”). See Supplementary Note 13 for further details. As an exploratory measure that is not analysed for the purposes of the current report, participants also indicated how morally wrong it would be for politicians to endorse the utilitarian approach in each dilemma.

For full text of dilemmas and introduction questions, see Supplementary Methods.

Our behavioural measure of trust in the current studies is based on a novel task with two types of participants: voters and donors. Voters were asked to cast a vote for a leader who would be responsible for making a charitable donation to UNICEF on behalf of a group of donors and would have the opportunity to ‘embezzle’ some of the donation money for themselves (Fig. 1d).

We collected data from donors first. A few days before we ran our main experiment, a convenience sample of US participants (N = 100) was recruited from Prolific and was provided with a US$2 bonus endowment. They were given the opportunity to donate up to their full bonus to UNICEF. After making their donation decision, they read about the five COVID-19 dilemmas, in randomized order, and indicated which policy they thought should be adopted. Finally, they were instructed that they might be selected to be responsible for the entire group’s donations to UNICEF. Participants were told that, if they were selected, they would have the opportunity to keep up to the full amount of total group donations for themselves, and were asked to indicate how much of the group’s donations they would keep for themselves if they were selected to be responsible.

Our main experiment focused on the behaviour of voter participants. In the voting task, participants were randomly assigned to read about one dilemma, randomly selected amongst the five dilemmas summarized in Table 1. After completing the dilemma introduction, participants were asked to “make a choice that has real financial consequences” and told that “[a] few days ago, a group of 100 people were recruited via an international online marketplace and invited to make donations to the charitable organization UNICEF. In total, they donated an amount equivalent to $87.89”. We instructed participants that we would like them to “vote for a leader to be responsible for the entire group’s donations”. Crucially, they were also told that “[t]he leader has two options: They can transfer the group’s $87.89 donation to UNICEF in full, or [t]hey can take some of this money for themselves (up to the full amount) and transfer whatever amount is left to UNICEF”. The exact donation amount was determined by the actual donation choices of the donor participants.

Following these details, participants were asked to cast a vote for the leadership position between two people who had also read about the same dilemma they had just read about. Participants were instructed that one person agreed with the utilitarian argument while the other person agreed with the non-utilitarian argument. This information was displayed to participants on the same page, in a randomized order. Participants were then asked to vote for the person they wished to be responsible for the group’s donations. We instructed participants that we would later identify the winner of the election, and implement their choice by distributing payments to the leader and UNICEF accordingly.

After completing the voting task, voter participants were asked the following comprehension question: “In the last page, you were asked to choose a leader that will be entrusted with the group’s donation. Please select the option that best describes what the leader will be able to do with the donation”. They were asked to select between three options, displayed in randomized order:

We excluded voter participants who failed to select the correct answer (1), as per our exclusion criteria (Exclusions). Note that in our stage 1 Registered Report the answer choices were slightly different, but we revised them after discovering in a soft launch that participants were systematically choosing one of the incorrect options, suggesting that the question was poorly worded. In consultation with the editor, we clarified the response options and began the data collection procedure anew. This was one of only three deviations from the stage 1 report (the others being that data collection took four weeks instead of the two weeks we had anticipated, and the use of Prolific instead of Lucid for recruitment in the United Kingdom and the United States).

After collecting the votes from the voter participants, we randomly selected ten donor participants to be considered for the leadership position: one who endorsed the utilitarian position for each of the five dilemmas and one who endorsed the non-utilitarian position for each of the five dilemmas. After tallying the votes from voter participants, we implemented the choices of each of the elected leaders and made the payments accordingly. For full text of instructions and questions for both the donor and the voting task, see Supplementary Methods.
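As a rough illustration of the tallying step, the sketch below counts votes separately for each dilemma and returns the winning position. It assumes one election per dilemma and a hypothetical data layout of `(dilemma, choice)` pairs; the text does not describe how ties were handled, so the tie behaviour here is arbitrary.

```python
from collections import Counter


def elect_leaders(votes):
    """Return the winning position per dilemma.

    votes: iterable of (dilemma, choice) pairs, where choice is
    "utilitarian" or "non-utilitarian" (hypothetical layout).
    """
    tallies = {}
    for dilemma, choice in votes:
        tallies.setdefault(dilemma, Counter())[choice] += 1
    # most_common(1) resolves ties by first-seen order; this is an
    # arbitrary choice, since tie handling is not specified in the text.
    return {d: counts.most_common(1)[0][0] for d, counts in tallies.items()}


if __name__ == "__main__":
    example = [("PPE", "utilitarian"), ("PPE", "utilitarian"),
               ("PPE", "non-utilitarian"), ("Lockdown", "non-utilitarian")]
    print(elect_leaders(example))
```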

Participants read about two dilemmas on the dimension of utilitarianism that they did not encounter in the voting task. That is, participants assigned to an instrumental harm dilemma (Lockdown, Ventilators or Tracing) for the voting task read both impartial beneficence dilemmas (PPE and Medicine) for the self-report task, while participants assigned to an impartial beneficence dilemma (PPE or Medicine) for the voting task read a randomly assigned two out of three instrumental harm dilemmas (Lockdown, Ventilators and Tracing) for the self-report task. The structure of the introduction to the dilemmas was identical to that in the voting task: they read a short description of the issue, followed by a description of two potential policies. On separate screens, they were asked which policy they themselves support.

After providing their own opinions, participants were asked to imagine that the mayor of a major city in their region was arguing for one of the two policies, providing either a utilitarian or non-utilitarian argument. Each participant was randomly assigned to read about leaders making either utilitarian or non-utilitarian arguments in both dilemmas presented in the self-report task. After reading about the leader’s opinion and argument, they were then asked to report their general trust in the leader (“How trustworthy do you think this person is?”), to be answered on a 1–7 scale, with labels “Not at all trustworthy”, “Somewhat trustworthy” and “Extremely trustworthy” at points 1, 4 and 7, respectively. On a separate page they were then asked to report their trust in the leader’s advice on other issues (“How likely would you be to trust this person’s advice on other issues?”), to be answered on a 1–7 scale, with labels “Not at all likely”, “Somewhat likely” and “Extremely likely” at points 1, 4 and 7, respectively.

After completing the self-report task, participants were asked the following comprehension question: “In the last page, you read about a mayor in a city in your region, and were asked about them. Please select the option that best describes the questions you were asked”. Their options, displayed in a randomized order, were: (1) “How much I agreed with the mayor”, (2) “How much I trusted the mayor”, and (3) “How much I admired the mayor”. This allowed us to exclude participants who failed to select the correct answer (2), as per our exclusion criteria (Exclusions).

For full text of instructions and questions for the self-report task, see Supplementary Methods.

To assess their attitudes toward and experience with the pandemic, participants were asked three questions. Two measured how concerned participants currently felt about the pandemic, on both health-related and economic grounds (“How concerned are you about the health-related consequences of the COVID-19 pandemic?” and “How concerned are you about the financial and economic consequences of the COVID-19 pandemic?”, both to be answered on a 1–7 scale, with labels “Not at all” and “Very much” at points 1 and 7, respectively). The third question measured their personal involvement (“Have you or anyone else you know personally suffered significant health consequences as a result of COVID-19?”, to be answered by selecting one of three options: “Yes”, “No” and “Unsure”).

All participants then completed the Oxford Utilitarianism Scale 33 . The scale consists of nine items in two subscales: instrumental harm (OUS-IH) and impartial beneficence (OUS-IB). The OUS-IB subscale consists of five items that measure endorsement of impartial maximization of the greater good, even at great personal cost (for example, “It is morally wrong to keep money that one doesn’t really need if one can donate it to causes that provide effective help to those who will benefit a great deal”). The OUS-IH subscale consists of four items relating to willingness to cause harm so as to bring about the greater good (for example, “It is morally right to harm an innocent person if harming them is a necessary means to helping several other innocent people”). Participants viewed all questions in a randomized order, and answered on a 1–7 scale, with labels “Strongly disagree”, “Disagree”, “Somewhat disagree”, “Neither agree nor disagree”, “Somewhat agree”, “Agree” and “Strongly agree”.

All participants were asked to report their gender, age, years spent in education, subjective SES, education (on the same scale, but with minor changes in the scale labels across countries), political ideology (using an item from the World Values Survey) and religiosity. These questions were the same across countries and represent the demographics used as covariates in the main analyses. Additionally, participants were asked to indicate their region of residence (for example for the United States, “Which US State do you currently live in?”), and ethnicity/race, with the specific wording and response options depending on the local context (in France and Germany, this was not collected due to local regulations). In addition, participants were asked to confirm their country of residence, which allowed us to exclude participants who reported living in a country different from that of intended recruitment, as per our exclusion criteria (Exclusions).

Finally, participants were asked a series of debriefing questions. Two of these assessed their participation in other COVID-related studies (“Approximately how many COVID-related studies have you participated in before this one?”, answered by selecting one of the following options: “0”, “1–5”, “6–10”, “11–20”, “21–50”, “More than 50” and “I don’t remember”, and “If you have participated in any other COVID-related studies, how similar were they to this one?”, to be answered by selecting one of the following options: “Extremely similar”, “Very similar”, “Moderately similar”, “Slightly similar”, “Not at all similar” and “Not applicable”).

An additional question assessed participants’ attitudes towards the charity involved in the voting task (“How reliable do you think UNICEF is as an organization in using donations for helping people?”, answered on a 1–5 scale, with labels “Not reliable at all”, “Somewhat reliable” and “Very reliable” at points 1, 3 and 5, respectively).

We planned to exclude data either at the participant level, as outlined in the Sampling plan section, based on criteria 1 (duplicate response), 2 (different residence) and 3 (partial completion), or on an analysis-by-analysis basis, based on criteria 4 (missing variables) and 5 (failed comprehension checks).

All participants’ responses were analysed, regardless of whether they were statistical outliers.

Composite measures of self-reported trust were created by averaging responses to the two trust questions (trustworthiness of the leader and trust in the leader’s advice on other issues), separately for each participant and dilemma. In addition, we created composite OUS scores for each participant by averaging their responses on the scale items, separately for the instrumental harm (four items) and impartial beneficence subscales (five items).
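The composite scores amount to simple averages. The following minimal sketch shows the computation for one participant; the function names and argument layout are hypothetical, and the subscale items are passed in separately because the assignment of individual items to subscales is not listed here.

```python
from statistics import mean


def composite_trust(trustworthiness, advice_trust):
    """Average of the two 1-7 trust ratings for one participant and dilemma."""
    return mean([trustworthiness, advice_trust])


def ous_scores(ih_items, ib_items):
    """Subscale means for the Oxford Utilitarianism Scale (4 IH items, 5 IB items)."""
    if len(ih_items) != 4 or len(ib_items) != 5:
        raise ValueError("expected 4 instrumental harm and 5 impartial beneficence items")
    return {"OUS-IH": mean(ih_items), "OUS-IB": mean(ib_items)}


if __name__ == "__main__":
    print(composite_trust(5, 6))
    print(ous_scores([1, 2, 3, 4], [7, 7, 7, 7, 7]))
```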

We planned to examine behavioural measures and self-report measures of trust in two separate models. For testing our hypotheses across all countries, we set a significance threshold of α = 0.0025 (Bonferroni corrected for two tests). All analyses were conducted in R using the packages lme4 79 , lmerTest 80 , estimatr 81 , emmeans 82 , ggeffects 83 , ri2 84 and glmnet 85 . We planned that, in the event of convergence or singularity issues, we would supplement the theoretically appropriate models described below with simplified models by reducing the complexity of the random-effects structure 86 .

To examine participants’ self-reported trust in the leaders, we planned to examine the composite measure of their trust in each leader (that is, the average of the two trust questions, computed separately for each participant and dilemma). We hypothesized that participants would report higher trust in non-utilitarian leaders compared with utilitarian leaders in the context of dilemmas involving instrumental harm, while the opposite pattern would be observed for impartial beneficence. To test this hypothesis, we planned to conduct a linear mixed-effects model of the effect of argument type (utilitarian versus non-utilitarian), dimension type (instrumental harm versus impartial beneficence) and their interaction on the composite score of trust, adding demographic variables (gender, age, education, subjective SES, political ideology and religiosity) and policy support as fixed effects and dilemmas and countries as random intercepts, with participants nested within countries. In addition, we planned to run a model that included countries as random slopes of the two main effects and the interactive effect. We said that, should the model converge and should the results differ from the simpler model proposed above, we would compare model fits using the Akaike information criterion (AIC) and retain the model that better fits the data, while still reporting the other in supplementary materials. We planned to follow up on significant effects with post hoc comparisons using Bonferroni corrections. For the purposes of the analysis, we used effect coding such that, for argument type, the non-utilitarian condition was coded as −0.5 and the utilitarian condition as 0.5, and for the dimension type, instrumental harm was coded as −0.5 and impartial beneficence as 0.5. The demographic covariates were grand-mean-centred; the gender variable was dummy coded with “woman” as baseline. 
P values were computed using Satterthwaite’s approximation for degrees of freedom as implemented in lmerTest. For analysis code, see https://osf.io/m9tpu/.
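To make the predictor coding concrete, the sketch below builds the effect-coded predictors and their interaction term, and grand-mean-centres a covariate. It illustrates the coding scheme only; the models themselves were fitted in R with lme4 and lmerTest, and the helper names here are hypothetical.

```python
# Effect codes as described in the text.
ARGUMENT_CODES = {"non-utilitarian": -0.5, "utilitarian": 0.5}
DIMENSION_CODES = {"instrumental harm": -0.5, "impartial beneficence": 0.5}


def effect_code(argument, dimension):
    """Return (argument code, dimension code, interaction term) for one observation."""
    a = ARGUMENT_CODES[argument]
    d = DIMENSION_CODES[dimension]
    return a, d, a * d


def grand_mean_centre(values):
    """Subtract the overall sample mean from each value of a covariate."""
    m = sum(values) / len(values)
    return [v - m for v in values]


if __name__ == "__main__":
    print(effect_code("utilitarian", "instrumental harm"))
    print(grand_mean_centre([1, 2, 3]))
```

With this coding, each main effect is estimated at the average of the other factor's levels, and the interaction coefficient captures how the effect of argument type differs between the two dimensions.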

To examine participants’ trust in the leaders as demonstrated by their behaviour, we planned to examine their choices in the voting task, where they were asked to select which of two leaders (one making a utilitarian argument and the other a non-utilitarian one) to entrust with a group charity donation. We hypothesized that participants would be more likely to select the non-utilitarian leader over the utilitarian leader when reading about their arguments for dilemmas involving instrumental harm, while the opposite pattern would be observed for impartial beneficence. To test this hypothesis, we planned to conduct a generalized linear mixed-effects model with the logit link of the effect of dimension type (instrumental harm versus impartial beneficence) on the leader choice (utilitarian versus non-utilitarian), adding demographic variables (gender, age, education, subjective SES, political ideology and religiosity) and policy support as fixed effects and dilemmas and countries as random intercepts, with participants nested within countries. In addition, we said we would also run a model that includes countries as random slopes of the effect of dimension type. Should the model converge and should the results differ from the simpler model proposed above, we planned to compare model fits using the Akaike information criterion (AIC) and retain the model that better fits the data, while still reporting the other in supplementary materials. Based on recent reports that linear models might be preferable to logistic models in treatment designs 63,64 , we said we would run the same analysis using a linear model (instead of logit link) with the identical fixed and random effects and again adjudicate between the models using the AIC. We planned to follow up on any significant effects observed with post hoc comparisons using Bonferroni corrections. 
For the purposes of this analysis, we planned to use effect coding such that, for the binary response variable of argument type, the non-utilitarian trust response was coded as 0 and the utilitarian trust response as 1, and for the dimension type, instrumental harm was coded as −0.5 and impartial beneficence as 0.5. Again, the demographic covariates were grand-mean-centred; the gender variable was dummy coded with “woman” as baseline. P values were computed using Satterthwaite’s approximation for degrees of freedom as implemented in lmerTest. For analysis code, see https://osf.io/m9tpu/.

Because there was evidence that public perceptions of lockdowns at the time of data collection were changing relative to July 2020 when we ran our pilots 87,88 , which may affect responses to the Lockdown dilemma, we planned to examine the robustness of our findings using two variations of the models described above, one that includes the Lockdown dilemma and another that omits it.

As some of the countries in our sample already implement mandatory and/or invasive contact tracing schemes at the time of writing (China, India, Israel, Singapore and South Korea), which may affect responses to the Tracing dilemma, we also planned to examine the robustness of our findings in these countries using two variations of the models described above, one that includes the Tracing dilemma and another that omits it. Furthermore, in this subset of countries we planned to examine an order effect to test whether completing the Tracing dilemma in the first task affects behaviour on the subsequent task.

In the event of non-significant results from the approaches outlined above, we planned to employ the TOST procedure 89 to differentiate between insensitive versus null results. In particular, we planned to specify lower and upper equivalence bounds based on standardized effect sizes set by our SESOI (Power analysis and Table 2). For each of our two tasks, should the larger of the two P values from the two t tests be smaller than α = 0.05, we would conclude statistical equivalence. For example, the minimum guaranteed sample size (N = 12,600; see Sample size for details) would give us over 95% power to detect an effect size of d = 0.05 in the self-report task, yielding standardized ΔL = −0.05 and ΔU = 0.05, and an OR of 1.30 in the voting task, yielding standardized ΔL = −0.15 and ΔU = 0.15.
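The decision rule of the TOST procedure can be sketched as below. This is a simplified illustration that assumes a standardized effect estimate with a known standard error and uses a normal approximation in place of the two t tests, which is reasonable only for large samples; the function name and inputs are hypothetical.

```python
from statistics import NormalDist


def tost_equivalence(estimate, se, lower, upper, alpha=0.05):
    """Two one-sided tests against equivalence bounds (normal approximation).

    Returns the larger of the two one-sided P values and whether
    equivalence is concluded at the given alpha.
    """
    z = NormalDist()
    # Test 1: H0 effect <= lower bound; reject if estimate is well above it.
    p_lower = 1 - z.cdf((estimate - lower) / se)
    # Test 2: H0 effect >= upper bound; reject if estimate is well below it.
    p_upper = z.cdf((estimate - upper) / se)
    p_max = max(p_lower, p_upper)
    return p_max, p_max < alpha


if __name__ == "__main__":
    # Illustrative numbers only, using the self-report bounds of -0.05 and 0.05.
    print(tost_equivalence(0.00, 0.01, -0.05, 0.05))
    print(tost_equivalence(0.04, 0.02, -0.05, 0.05))
```

Equivalence is concluded only when both one-sided tests reject, which is why the larger of the two P values is compared with α.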

We planned to complete the study online with participants in the following countries: Australia, Brazil, Canada, Chile, China, Denmark, France, Germany, India, Israel, Italy, the Kingdom of Saudi Arabia, Mexico, the Netherlands, Norway, Singapore, South Africa, South Korea, Spain, the United Arab Emirates, the United Kingdom and the United States (Fig. 1a). We sampled on every inhabited continent and included countries that have been more or less severely affected by COVID-19 on a variety of metrics (Supplementary Fig. 1). Country selection was determined primarily on a convenience basis. In April 2020, the senior author put out a call for collaborators via social media and email. Potential collaborators were asked whether they had the capacity to recruit up to 1,000 participants representative for age and gender within their home country. After the initial set of collaborators was established, we added additional countries to diversify our sample with respect to geographic location and pandemic severity.

We planned to recruit participants via online survey platforms (Supplementary Table 1) and compensate them financially for their participation in accordance with local standard rates. We aimed to recruit samples that were nationally representative with respect to age and gender where feasible. We anticipated that this would be feasible for many but not all countries in our study (see Supplementary Table 1 for details). We originally anticipated sampling to take place over a 14-day period, but to allow for more representative sampling (after discussion with the editor), we collected data over a period of 27 days (26 November 2020 to 22 December 2020). All survey materials were translated into the local language (see Translations for details). Prior to the survey, all participants read and approved a consent form outlining their risks and benefits, confirmed they agreed to participate in the experiment and completed two attention checks. Participants who failed to agree to the consent or failed to pass the attention checks were not permitted to complete the survey (with the exception of participants in the United States and the United Kingdom, who due to recruitment platform requirements were instead allowed to continue the survey, and were only excluded after data collection).

We informed our expected effect sizes by examining the published literature on utilitarianism and trust. Previous studies of social impressions of utilitarians reveal effect sizes in the range of d = 0.19–0.78 (mean d = 0.78 for the effect of instrumental harm on self-reported moral impressions; mean d = 0.19 for the effect of impartial beneficence on self-reported moral impressions; mean d = 0.55 for interactive effects of instrumental harm and impartial beneficence on self-reported moral impressions) 35,36,37,38,39 . However, there are several important caveats with using these past studies to inform expected effect sizes for the current study. First, past studies have measured trust in ordinary people, while we study trust in leaders, and there is evidence that instrumental harm and impartial beneficence differentially impact attitudes about leaders versus ordinary people 37 . Second, past studies have investigated artificial moral dilemmas, while we study real moral dilemmas in the context of an ongoing pandemic. Third, past studies have been conducted in a small number of Western countries (the United States, the United Kingdom and Germany), while we sample across a much wider range of countries on six continents. Finally, for the voting task, it is more challenging to estimate an expected effect size because no previous studies to our knowledge have used such a task.

Because of the caveats described above, we also informed our expectations of effect sizes with data from pilot 2, which was identical to the proposed studies in design apart from using The Red Cross instead of UNICEF in the voting task and the omission of the Tracing dilemma (see Pilot data in Supplementary Information for a full description of the pilot experiments). Pilot 2 revealed a conventionally medium effect size for the interaction between argument and moral dimension in the self-report task (B = 2.88, s.e. 0.24, t(452) = 11.80, P < 0.001, CI [2.41, 3.35], d = 0.55) and a conventionally large effect size for the effect of moral dimension in the voting task (B = 2.41, s.e. 0.33, z = 7.30, P < 0.001, CI [1.77, 3.13], OR 11.13, d = 1.33).
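The odds ratio and standardized effect size reported for the voting task can be recovered from the logistic coefficient. The sketch below assumes the common logistic-distribution conversion d = ln(OR) × √3/π; the text does not state which conversion was used, but this one reproduces the reported values.

```python
import math


def log_odds_to_odds_ratio(b):
    """Exponentiate a logistic regression coefficient to obtain an odds ratio."""
    return math.exp(b)


def log_odds_to_d(b):
    """Convert a log odds ratio to Cohen's d (assumed conversion: ln(OR) * sqrt(3) / pi)."""
    return b * math.sqrt(3) / math.pi


if __name__ == "__main__":
    b = 2.41  # pilot 2 coefficient for moral dimension in the voting task
    print(round(log_odds_to_odds_ratio(b), 2))  # 11.13
    print(round(log_odds_to_d(b), 2))           # 1.33
```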

Sample size was determined based on a cost–benefit analysis considering available resources and expected effect sizes that would be theoretically informative 89 (see Expected effect sizes). We aimed to collect the largest sample possible with the resources available and verified with power analyses that our planned sample would be able to detect effect sizes that are theoretically informative and at least as large as expected based on prior literature (see Power analysis). We expected to collect a sample of 21,000 participants in total, which, conservatively accounting for exclusion rates of up to 40% (see Exclusions), would lead to a final guaranteed minimum sample of 12,600 participants.

We conducted a series of power analyses to determine the smallest effect sizes that our minimum guaranteed sample of 12,600 participants would be able to detect with 95% power and an α level of 0.005, separately for each main model (see Analysis plan for further details). Because we test two main models (one for the self-report task and one for the voting task), for all power analyses we applied Bonferroni corrections for two tests, thus yielding an α of 0.0025. Following recent suggestions 90,91 , results passing a corrected α of P ≤ 0.005 are interpreted as ‘supportive evidence’ for our hypotheses, while results passing a corrected α of P < 0.05 are interpreted as ‘suggestive evidence’. Power analyses were conducted using Monte Carlo simulations 92 via the R package simr 93 , with 1,000 simulations, using estimates of means and variances from pilot 2 (see Pilot data in Supplementary Information for a full description of the pilot experiments; note that, for the purposes of the current simulations, the race variable was omitted from data analysis because this variable is not readily comparable across countries). Data and code for power analyses can be found at https://osf.io/m9tpu/.
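The logic of simulation-based power analysis can be illustrated with a deliberately simplified sketch. It is not the simr analysis described above: it assumes a two-group comparison of means evaluated with a large-sample z-test rather than the mixed models fitted to the pilot data. The effect size, group size, number of simulations and the function name `simulate_power` are all hypothetical choices made so that the example runs quickly; only the Bonferroni-corrected α of 0.0025 is taken from the text.

```python
import random
from statistics import NormalDist, fmean, variance


def simulate_power(d, n_per_group, alpha, n_sims=500, seed=1):
    """Estimate power as the share of simulated datasets with p <= alpha.

    Simplifying assumptions: two independent groups, unit-variance normal
    outcomes, and a two-sided large-sample z-test on the mean difference.
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    hits = 0
    for _ in range(n_sims):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(d, 1.0) for _ in range(n_per_group)]
        # Standard error of the difference between the two group means.
        se = ((variance(control) + variance(treated)) / n_per_group) ** 0.5
        z = (fmean(treated) - fmean(control)) / se
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        if p_value <= alpha:
            hits += 1
    return hits / n_sims


# Bonferroni correction for two tests, as described in the text.
alpha = 0.005 / 2

# Hypothetical illustration values, not the study's parameters.
power = simulate_power(d=0.4, n_per_group=200, alpha=alpha)
print(f"alpha = {alpha}, estimated power = {power:.3f}")
```

Lowering `d` until the estimated power drops to the target level (95% in the text) gives the smallest detectable effect for a given sample size, which is the quantity the reported power analyses are designed to identify.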

First, we considered the interactive effect of moral dimension (instrumental harm versus impartial beneficence) and argument (utilitarian versus non-utilitarian) on trust in the self-report task. We estimated that a sample of 12,600 participants would provide over 95% power to detect an effect size of d = 0.05 (power 99.3%, CI [98.56, 99.72]). This effect size is 9% of what we observed in pilot 2 and is the SESOI for the self-report task.

Next, we considered the effect of moral dimension (instrumental harm versus impartial beneficence) on leader choice in the voting task. We estimated that a sample of 12,600 participants would provide over 95% power to detect an odds ratio of 1.30 (power 95.8%, CI [94.36, 96.96]). This effect size is approximately 12% of the odds ratio we observed in pilot 2 (1.30 versus 11.13) and is the SESOI for the voting task.

Given that these SESOI values are detectable at 95% power with our guaranteed sample (total N = 12,600), are theoretically informative and are lower than our expected effect sizes (Expected effect sizes), we concluded that our sample is sufficient to provide over 95% power for testing our hypotheses and that our study is highly powered to detect useful effects.

At the time of submission, online survey platform representatives indicated that, while it is normally feasible to recruit samples nationally representative for age and gender in most of our target countries, due to the ongoing pandemic, final sample sizes may be unpredictable and in some countries it would not be possible to achieve fully representative quotas for some demographic categories, including women and older people (see Supplementary Table 1 for details). We planned that, if this issue arose, we would prioritize statistical power over representativeness. If we were unable to achieve representativeness for age and/or gender in particular countries, we planned to note this explicitly in the Results section.

We planned to exclude participants from all further analyses if they met at least one of the following criteria: (1) they had taken the survey more than once (as indicated by IP address or worker ID); (2) they reported in a question about their residence (further described in Design) that they lived in a country different from that of intended recruitment; (3) they did not answer more than 50% of the questions. In addition, participants would be selectively excluded from specific analyses if they (4) did not provide a response and were thus missing variables involved in the analysis or (5) failed the comprehension check (further described in Design) for the task involved in the specific analysis.
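The two tiers of exclusion rules above can be sketched as a simple filter. This is an illustrative sketch only: the `Participant` record, its field names and the two function names are hypothetical and do not correspond to the study's actual data files. Criterion 3 is read here as "answered 50% or fewer of the questions", which is one interpretation of the wording.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical participant record used only for this illustration."""
    is_duplicate: bool         # criterion 1: repeated IP address or worker ID
    reported_country: str      # country given in the residence question
    recruited_country: str     # country of intended recruitment
    fraction_answered: float   # share of survey questions answered (0 to 1)
    responses: dict = field(default_factory=dict)     # task -> response or None
    passed_check: dict = field(default_factory=dict)  # task -> bool


def excluded_from_all_analyses(p: Participant) -> bool:
    """Criteria 1-3: exclusion from every analysis."""
    return (
        p.is_duplicate
        or p.reported_country != p.recruited_country
        # Assumed reading of criterion 3: answered 50% or fewer.
        or p.fraction_answered <= 0.5
    )


def included_in_analysis(p: Participant, task: str) -> bool:
    """Criteria 4-5: task-specific exclusion for missing data or failed check."""
    if excluded_from_all_analyses(p):
        return False
    has_response = p.responses.get(task) is not None
    passed = p.passed_check.get(task, False)
    return has_response and passed


example = Participant(
    is_duplicate=False,
    reported_country="BR",
    recruited_country="BR",
    fraction_answered=0.9,
    responses={"self_report": 5, "voting": None},
    passed_check={"self_report": True, "voting": True},
)
print(included_in_analysis(example, "self_report"))  # True
print(included_in_analysis(example, "voting"))       # False (missing response)
```

Keeping the global and task-specific rules in separate functions mirrors the structure of the criteria: a participant retained overall can still drop out of one task's analysis while contributing to the other.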

Further information on research design is available in the Nature Research Reporting Summary linked to this article.